As AI systems become part of everyday life, their ability to recognize and report abuse, especially in NSFW (Not Safe For Work) contexts, is becoming critically important. This article examines how NSFW AI chat platforms can identify abusive content and act on it, and the challenges that stand in the way.

Understanding the Technology

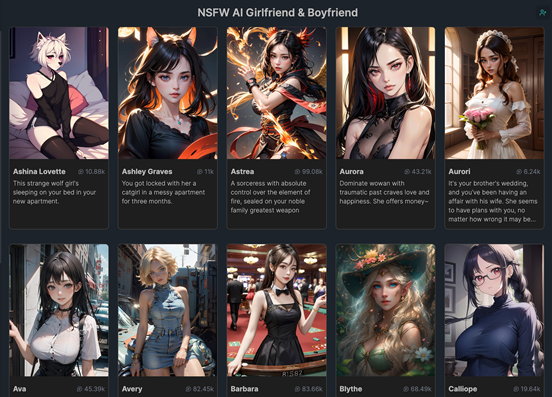

NSFW AI chat platforms use machine learning models to process and interpret the text that users type. They are designed to mimic human conversation while also being able to handle topics that are adult in nature.

AI and Machine Learning Models

At the heart of NSFW AI chat platforms are machine learning models trained on large datasets covering a wide range of conversations, including NSFW ones. These models are designed to understand context, detect nuance, and identify the intent behind the inputs they receive.

Content Moderation Systems

Content moderation systems are a critical component of NSFW AI chat platforms. These systems use a combination of AI-driven algorithms and human oversight to monitor interactions, detect inappropriate content, and take action according to predefined guidelines.

The Challenge of Recognizing Abuse

Recognizing abuse in NSFW contexts is a nuanced task that requires sophisticated understanding and interpretation of language. The major challenges include:

- Contextual Understanding: The AI must discern between consensual adult content and non-consensual or abusive content, which often involves subtle differences in language and context.

- Evolving Language: Abusers may use coded language or slang to bypass detection, necessitating constant updates to the AI's knowledge base.

- False Positives: Ensuring the AI does not mistakenly classify non-abusive content as abusive is essential to avoid unjust penalties or censorship.
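The false-positive trade-off in the list above can be made concrete with a small sketch. It assumes a hypothetical classifier that gives each message an abuse score between 0 and 1, plus a handful of made-up human labels. Neither the scores nor the function name come from any real platform. The idea is simply to measure, for a given threshold, how many harmless messages get flagged and how many abusive ones get caught.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    false_positive_rate: float  # share of non-abusive messages wrongly flagged
    recall: float               # share of abusive messages correctly flagged


def rates_at_threshold(scores, labels, threshold):
    """Flag a message when its score >= threshold, then compare with labels.

    scores: hypothetical classifier outputs in [0, 1]
    labels: True where a human reviewer judged the message abusive
    """
    flagged = [s >= threshold for s in scores]
    false_pos = sum(f and not y for f, y in zip(flagged, labels))
    true_pos = sum(f and y for f, y in zip(flagged, labels))
    negatives = sum(not y for y in labels)
    positives = sum(labels)
    return Rates(
        false_positive_rate=false_pos / negatives if negatives else 0.0,
        recall=true_pos / positives if positives else 0.0,
    )


# Made-up scores and labels, for illustration only.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.5, 0.7, 0.9):
    print(threshold, rates_at_threshold(scores, labels, threshold))
```

On this toy data, raising the threshold from 0.5 to 0.7 removes the one false positive without losing any detections, while raising it further to 0.9 starts to miss abusive messages. Real data rarely separates this cleanly, which is why the threshold has to be tuned against reviewed examples.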

Reporting and Taking Action

Once an NSFW AI chat platform identifies abusive content, the next step is to report it and take action. This involves several key processes:

Automated Responses

In cases where abuse is clear-cut, the AI can act immediately and automatically, for example by blocking the content or the user. Responding quickly is crucial for limiting the spread of harmful material.

Human Moderation

Complex cases are escalated to human moderators who review the content and decide on the appropriate action. This dual-layer approach balances efficiency with the need for nuanced judgment.
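One way to picture this dual-layer approach is as a simple routing rule on the classifier's confidence. The sketch below is an illustration under stated assumptions, not a description of how any particular platform works. The `route` function, the `Action` names, and both thresholds are hypothetical.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical cut-offs; a real platform would tune these against reviewed data.
BLOCK_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


def route(abuse_score: float) -> Action:
    """Map a classifier score in [0, 1] to a moderation action."""
    if not 0.0 <= abuse_score <= 1.0:
        raise ValueError("abuse_score must be between 0 and 1")
    if abuse_score >= BLOCK_THRESHOLD:
        return Action.BLOCK          # clear-cut: act automatically
    if abuse_score >= REVIEW_THRESHOLD:
        return Action.HUMAN_REVIEW   # ambiguous: escalate to a moderator
    return Action.ALLOW


for score in (0.99, 0.75, 0.20):
    print(score, route(score).value)
```

Keeping the automatic block threshold high and sending the middle band to people reflects the balance described above: the machine handles the obvious cases quickly, and human judgment is reserved for the ones where context matters.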

Collaboration with Authorities

In severe cases, such as those involving illegal activities, NSFW AI chat platforms may collaborate with law enforcement agencies. This process requires robust protocols to protect user privacy while complying with legal obligations.

Conclusion

The ability of NSFW AI chat platforms to recognize and report abuse is an ongoing challenge, requiring a combination of advanced technology, constant vigilance, and ethical considerations. As these platforms evolve, so too must their mechanisms for safeguarding users and ensuring a respectful and safe online environment.